LIVE Color+3D Database

The LIVE 3D databases are constructed in collaboration with the Center for Perceptual Systems (CPS, http://www.cps.utexas.edu).

Introduction

Color is an important and dense natural visual cue that is used by the brain to reconstruct both low-level and high-level visual percepts. The cone photoreceptors, which are densely distributed in the fovea centralis of the retina, capture and convey rich information both in space and time. While the cones themselves do not encode color, they do come in three types that have different spectral sensitivities. Hence, comparisons of the outputs of the different cone types by the retinal ganglion cells allow dense spatiotemporal chromatic information to be transmitted from the retina to the primary visual cortex (V1).

Color can be used at later visual processing stages to help infer large-scale shape information, which helps both humans and machine algorithms solve visual tasks. Moreover, it has been demonstrated that the perception of color and the perception of depth are related, and that chromatic information can be used to improve the solution of stereo correspondence problems. The interaction and correlation between color and depth therefore need further examination.

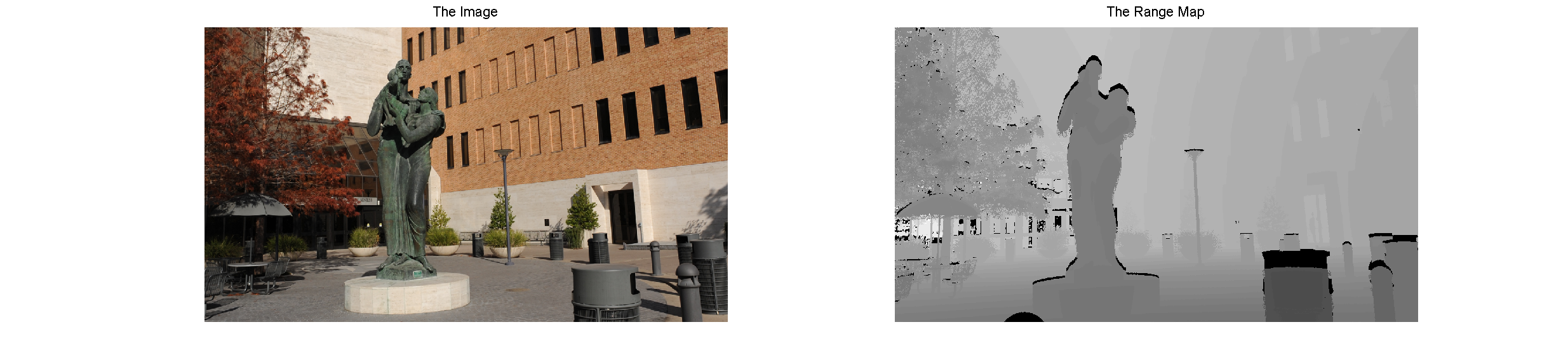

To better understand the statistical relationships between color and range, we constructed the LIVE Color+3D Database.

Database Description

The LIVE Color+3D Database consists of accurately co-registered color images and ground-truth range maps at high-definition resolutions. The image and range data were collected using a RIEGL VZ-400 range scanner with a Nikon D700 digital camera mounted on top of it. Because mounting the camera onto the range scanner inevitably introduces translational and rotational offsets, the mounting is calibrated before data acquisition. This calibration is done manually using the RIEGL RiSCAN PRO software, which is designed for scanner operation and data processing. To acquire the image and range data in natural scenes, the scanner measures distances by lidar reflection and waveform analysis as it rotates, and the digital camera then takes an optical photograph with the same field of view. The acquired range data are exported from the range scanner as point clouds with three-dimensional coordinates and range values, while the image data are stored in the digital camera. Finally, to obtain a 2D range map aligned with the 2D image, the 3D point clouds are projected into the image plane by applying the pinhole camera model with lens distortion. The image and range data were collected in natural environments around Austin, Texas, including the campus of The University of Texas and the Texas State Capitol.
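The projection of a 3D point cloud into a camera-aligned 2D range map can be illustrated with a minimal sketch. This is not the processing code used to build the database. The function name `project_to_range_map`, the intrinsic parameters (`fx`, `fy`, `cx`, `cy`), the two-coefficient radial distortion model (`k1`, `k2`), and the choice to store the Euclidean distance from the camera center are all assumptions made for illustration; the actual calibration values and distortion model come from the RiSCAN PRO mounting calibration and are not given here.

```python
import numpy as np

def project_to_range_map(points, R, t, fx, fy, cx, cy,
                         k1=0.0, k2=0.0, width=1280, height=720):
    """Project scanner-frame 3D points (N x 3) into a 2D range map.

    R (3 x 3) and t (3,) are a hypothetical scanner-to-camera rotation
    and translation; fx, fy, cx, cy are hypothetical pinhole intrinsics
    in pixels; k1, k2 are assumed radial distortion coefficients.
    """
    # Move the points from the scanner frame into the camera frame.
    cam = points @ R.T + t
    # Discard points behind the camera.
    cam = cam[cam[:, 2] > 0]
    # Assumption: the stored range is the distance from the camera center.
    rng = np.linalg.norm(cam, axis=1)

    # Pinhole projection onto the normalized image plane.
    x = cam[:, 0] / cam[:, 2]
    y = cam[:, 1] / cam[:, 2]
    # Simple radial lens distortion (assumed model).
    r2 = x * x + y * y
    d = 1.0 + k1 * r2 + k2 * r2 * r2
    u = np.rint(fx * x * d + cx).astype(int)
    v = np.rint(fy * y * d + cy).astype(int)

    # Keep only the points that land inside the image.
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, rng = u[inside], v[inside], rng[inside]

    # Pixels with no lidar return stay at infinity; where several points
    # fall on one pixel, keep the nearest surface.
    range_map = np.full((height, width), np.inf)
    np.minimum.at(range_map, (v, u), rng)
    return range_map
```

Calling the function with an identity rotation, a zero translation, and a point cloud already expressed in the camera frame returns a `height` by `width` array in which each pixel holds the range of the nearest projected point. Pixels that receive no point remain infinite, so a real pipeline would still need to handle or interpolate those holes.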

We are making the databases available to the research community free of charge. Download information for each release is given below. If you use these databases in your research, we kindly ask that you reference this webpage and our papers relevant to each database.

Please contact Che-Chun Su (ccsu@utexas.edu) if you have any questions.

Investigators

3D natural scene statistics research at LIVE is being conducted in collaboration with the Center for Perceptual Systems (CPS, http://www.cps.utexas.edu).

The investigators in this research are:

- Dr. Che-Chun Su (ccsu@utexas.edu) - Graduated from UT Austin in 2014

- Dr. Lawrence K. Cormack (cormack@psy.utexas.edu) - Professor, Department of Psychology at UT Austin

- Dr. Alan C. Bovik (bovik@ece.utexas.edu) - Professor, Department of ECE at UT Austin

- Dr. Brian McCann (brian.c.mccann@utexas.edu)

- Dr. Johannes Burge (jburge@sas.upenn.edu)

- Dr. Steve Sebastian (sebastian@utexas.edu)

Download Database

- Release 1

  - 12 sets of color images and dense ground-truth range maps

  - High-definition resolution of 1280x720 for both color images and dense ground-truth range maps

If you use this database in your research, please reference the following papers:

- C.-C. Su, L. K. Cormack, and A. C. Bovik, "Color and depth priors in natural images," IEEE Transactions on Image Processing, vol. 22, no. 6, pp. 2259-2274, June 2013.

- C.-C. Su, A. C. Bovik, and L. K. Cormack, "Natural scene statistics of color and range," IEEE International Conference on Image Processing, pp. 257-260, Sep. 2011.

Please fill out this form to obtain the download link and the password.

- Release 2

  - 98 sets of stereo color image pairs and dense ground-truth depth maps

  - High-definition resolution of 1920x1080 for both color images and dense ground-truth range maps

If you use this database in your research, please reference the following papers:

- C.-C. Su, L. K. Cormack, and A. C. Bovik, "Bayesian depth estimation from monocular natural images," Journal of Vision, vol. 17, no. 5 (22), pp. 1-29, May 2017.

Please fill out this form to obtain the download link and the password.

Copyright Notice

---------- COPYRIGHT NOTICE STARTS WITH THIS LINE ----------

Copyright (c) 2017 The University of Texas at Austin

All rights reserved. Permission is hereby granted, without written agreement and without license or royalty fees, to use, copy, modify, and distribute this database (the images, the results and the source files) and its documentation for any purpose, provided that the copyright notice in its entirety appear in all copies of this database, and the original source of this database, Laboratory for Image and Video Engineering (LIVE, http://live.ece.utexas.edu) and Center for Perceptual Systems (CPS, http://www.cps.utexas.edu) at the University of Texas at Austin (UT Austin, http://www.utexas.edu), is acknowledged in any publication that reports research using this database.

The following papers are to be cited in the bibliography whenever the database is used:

- C.-C. Su, L. K. Cormack, and A. C. Bovik, "Bayesian depth estimation from monocular natural images," Journal of Vision, vol. 17, no. 5 (22), pp. 1-29, May 2017.

- C.-C. Su, L. K. Cormack, and A. C. Bovik, "Color and depth priors in natural images," IEEE Transactions on Image Processing, vol. 22, no. 6, pp. 2259-2274, June 2013.

- C.-C. Su, A. C. Bovik, and L. K. Cormack, "Natural scene statistics of color and range," IEEE International Conference on Image Processing, pp. 257-260, Sep. 2011.

- URL: http://live.ece.utexas.edu/research/3dnss/live_color_plus_3d.html

IN NO EVENT SHALL THE UNIVERSITY OF TEXAS AT AUSTIN BE LIABLE TO ANY PARTY FOR DIRECT, INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES ARISING OUT OF THE USE OF THIS DATABASE AND ITS DOCUMENTATION, EVEN IF THE UNIVERSITY OF TEXAS AT AUSTIN HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

-----------COPYRIGHT NOTICE ENDS WITH THIS LINE------------

THE UNIVERSITY OF TEXAS AT AUSTIN SPECIFICALLY DISCLAIMS ANY WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. THE DATABASE PROVIDED HEREUNDER IS ON AN "AS IS" BASIS, AND THE UNIVERSITY OF TEXAS AT AUSTIN HAS NO OBLIGATION TO PROVIDE MAINTENANCE, SUPPORT, UPDATES, ENHANCEMENTS, OR MODIFICATIONS.
