Video ATLAS: Video Assessment of TemporaL Artifacts and Stalls

Video ATLAS model

Introduction

Mobile streaming video data accounts for a large and increasing percentage of wireless network traffic. The available bandwidths of modern wireless networks are often unstable, leading to difficulties in delivering smooth, high-quality video. Streaming service providers such as Netflix and YouTube attempt to adapt to these bandwidth limitations by changing the video bitrate or, failing that, allowing playback interruptions (rebuffering).

Being able to predict end users’ quality of experience (QoE) resulting from these adjustments could lead to perceptually-driven network resource allocation strategies that would deliver streaming content of higher quality to clients, while being cost-effective for providers. Existing objective QoE models consider the effects on user QoE of either video quality changes or playback interruptions, but not both together. For streaming applications, however, adaptive network strategies may combine dynamic bitrate allocation with playback interruptions when the available bandwidth reaches a very low value.

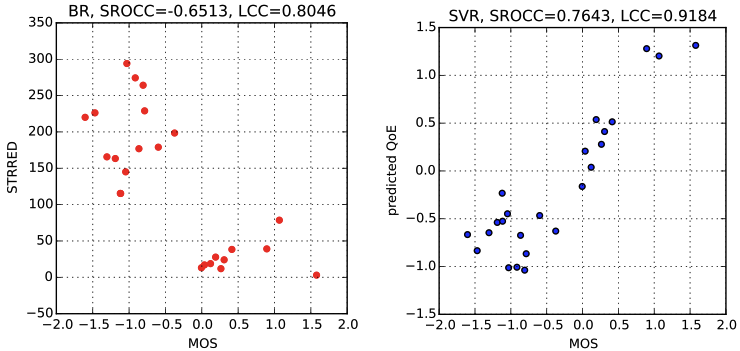

Towards more effectively predicting user QoE, we have developed a QoE prediction model called Video Assessment of TemporaL Artifacts and Stalls (Video ATLAS). It is a learning-based approach that combines a number of QoE-related features, including perceptually-relevant quality features, rebuffering-aware features and memory-driven features, to make QoE predictions. We evaluated Video ATLAS on the recently designed LIVE-Netflix Video QoE Database, which consists of practical playout patterns where the videos are afflicted by both quality changes and rebuffering events. We found that it provides improved performance over state-of-the-art video quality metrics while generalizing well to different datasets.

Download

The Video ATLAS software is available to the research community free of charge. If you use this software in your research, we kindly ask that you cite the references listed below:

- C. G. Bampis and A. C. Bovik, “Learning to Predict Streaming Video QoE: Distortions, Rebuffering and Memory,” submitted to Signal Processing: Image Communication.

- C. G. Bampis and A. C. Bovik, “Video ATLAS Software Release,” Online: http://live.ece.utexas.edu/research/Quality/VideoATLAS_release.zip, 2017.

You can download the publicly available software release from the URL given in the second reference above.

Algorithm Description

The Video ATLAS model is a new QoE prediction model that integrates objective VQA metrics with rebuffering-related features and memory information to predict overall QoE. Video ATLAS uses five features (VQA, R1, R2, I and M):

1. To measure visual quality, we use the reduced-reference ST-RRED algorithm as an input feature (VQA feature). In recent experiments, we have discovered that ST-RRED is an exceptionally robust and high-performing video quality model when tested on a very wide spectrum of video quality datasets.

2. To account for the effects of rebuffering, we also included the length of the rebuffering event(s), measured in seconds (R1), and the number of rebuffering events (R2) as input features. The length of the rebuffering event(s) was normalized to the duration of each video.

3. While the previous features consider rebuffering and quality changes, we also computed the time (in seconds) per video over which a bitrate drop took place, following the simple notion that the relative amount of time that a video is more heavily distorted is directly related to the overall QoE. This feature (I) was normalized to the duration of each video.

4. To model the effects of memory/recency, we computed the time since the last rebuffering event or rate drop took place and was completed, viz., the number of seconds with normal playback at the maximum possible bitrate until the end of the video. This memory-related feature (M) was normalized to the duration of each video.
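
The rebuffering, impairment and memory features described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code from the software release: the `Segment` timeline format and the function name `atlas_timing_features` are hypothetical, R1 is taken to be the total stall time, "duration of each video" is taken to mean playback time excluding stalls, and a "bitrate drop" is taken to mean any playback below the highest bitrate seen in the session.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Segment:
    """One contiguous stretch of a playout timeline (hypothetical format)."""
    duration: float           # length of the stretch, in seconds
    bitrate: Optional[float]  # bitrate while playing; None while rebuffering


def atlas_timing_features(segments: List[Segment]) -> Dict[str, float]:
    """Compute R1, R2, I and M for one playout (illustrative sketch)."""
    playing = [s for s in segments if s.bitrate is not None]
    stalls = [s for s in segments if s.bitrate is None]
    if not playing:
        raise ValueError("timeline contains no playback segments")

    # Assumption: normalize by playback time, excluding stalls.
    video_duration = sum(s.duration for s in playing)
    # Assumption: the "maximum possible bitrate" is the highest one observed.
    max_bitrate = max(s.bitrate for s in playing)

    # R1: total rebuffering time, normalized to the video duration.
    r1 = sum(s.duration for s in stalls) / video_duration
    # R2: number of rebuffering events (each stall segment counts once).
    r2 = len(stalls)
    # I: time spent below the maximum bitrate, normalized.
    impaired = sum(s.duration for s in playing if s.bitrate < max_bitrate)
    i = impaired / video_duration

    # M: unimpaired playback at the end of the video, i.e. time since the
    # last stall or rate drop finished, normalized.
    tail = 0.0
    for s in reversed(segments):
        if s.bitrate is None or s.bitrate < max_bitrate:
            break
        tail += s.duration
    m = tail / video_duration

    return {"R1": r1, "R2": float(r2), "I": i, "M": m}


# Made-up example: 10 s at high rate, a 2 s stall, 4 s at low rate,
# then 6 s back at the high rate.
example = [
    Segment(10.0, 3000.0),
    Segment(2.0, None),
    Segment(4.0, 1000.0),
    Segment(6.0, 3000.0),
]
print(atlas_timing_features(example))
```

In this made-up timeline the video lasts 20 seconds, so the single 2-second stall, the 4 seconds at the lower rate and the final 6 seconds of unimpaired playback give R1 = 0.1, R2 = 1, I = 0.2 and M = 0.3.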

To integrate the various QoE-aware features, we used a Support Vector Regressor (SVR).
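
As a rough illustration of this regression step, the sketch below fits an SVR from scikit-learn on a five-column feature matrix ordered as [VQA, R1, R2, I, M]. Everything numeric here is an assumption made for the example: the feature values and target scores are random placeholders rather than data from any subjective study, and the kernel, `C`, `epsilon` and the feature scaling step are illustrative choices, not the settings used in the released software.

```python
import random

from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

rng = random.Random(0)

# Placeholder feature rows: [VQA, R1, R2, I, M]. Random values only,
# standing in for features computed from real videos.
X = [
    [rng.random(), 0.2 * rng.random(), rng.randint(0, 3),
     rng.random(), rng.random()]
    for _ in range(40)
]
# Placeholder subjective scores, also random; real targets would come
# from a subjective QoE database.
y = [rng.uniform(-1.0, 1.0) for _ in range(40)]

# Scale the features, then regress with an RBF-kernel SVR
# (hyperparameters are illustrative assumptions).
model = make_pipeline(StandardScaler(), SVR(kernel="rbf", C=1.0, epsilon=0.1))
model.fit(X, y)

# Predict QoE for a few feature rows.
pred = model.predict(X[:3])
print(pred)
```

Because the regressor is the last step of a pipeline, it could be replaced by another scikit-learn estimator without touching the rest of the sketch.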

Flexibility of Video ATLAS

The proposed Video ATLAS model relies on the aforementioned five features and an SVR learning engine. However, its architecture is flexible and allows for the use of different features, video quality models, pooling strategies for the VQA feature, and learning engines. For example, Video ATLAS allows the use of any full reference (FR) or no reference (NR) image/video quality model as appropriate for the application context. The publicly available software release supports video quality models such as MS-SSIM, GMSD, NIQE and others. It also supports a Random Forest regressor. For a more diverse set of use cases, we refer the interested researcher to the related paper.

Investigators

The investigators in this research are:

- Christos G. Bampis ( cbampis@gmail.com ) -- Graduate student, Dept. of ECE, UT Austin.

- Alan C. Bovik ( bovik@ece.utexas.edu ) -- Professor, Dept. of ECE, UT Austin.

Copyright Notice

-----------COPYRIGHT NOTICE STARTS WITH THIS LINE------------

Copyright (c) 2017 The University of Texas at Austin

All rights reserved.

Permission is hereby granted, without written agreement and without license or royalty fees, to use, copy, modify, and distribute this software and its documentation for any purpose, provided that the copyright notice in its entirety appear in all copies of this software, and the original source of this software, Laboratory for Image and Video Engineering (LIVE, http://live.ece.utexas.edu) at the University of Texas at Austin (UT Austin, http://www.utexas.edu), is acknowledged in any publication that reports research using this software.

The following papers are to be cited in the bibliography whenever the software is used as:

- C. G. Bampis and A. C. Bovik, “Learning to Predict Streaming Video QoE: Distortions, Rebuffering and Memory,” submitted to Signal Processing: Image Communication.

IN NO EVENT SHALL THE UNIVERSITY OF TEXAS AT AUSTIN BE LIABLE TO ANY PARTY FOR DIRECT, INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES ARISING OUT OF THE USE OF THIS SOFTWARE AND ITS DOCUMENTATION, EVEN IF THE UNIVERSITY OF TEXAS AT AUSTIN HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

THE UNIVERSITY OF TEXAS AT AUSTIN SPECIFICALLY DISCLAIMS ANY WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. THE SOFTWARE PROVIDED HEREUNDER IS ON AN "AS IS" BASIS, AND THE UNIVERSITY OF TEXAS AT AUSTIN HAS NO OBLIGATION TO PROVIDE MAINTENANCE, SUPPORT, UPDATES, ENHANCEMENTS, OR MODIFICATIONS.

-----------COPYRIGHT NOTICE ENDS WITH THIS LINE------------
