Ian van der Linde, Umesh Rajashekar, Alan C. Bovik, and Lawrence K. Cormack

Laboratory for Image & Video Engineering (LIVE)
Center for Perceptual Systems, The University of Texas at Austin

Introduction

DOVES (a Database Of Visual Eye movementS) is a collection of eye movements from 29 human observers as they viewed 101 calibrated natural images. Recorded using a high-precision dual-Purkinje eye tracker, the database consists of around 30,000 fixation points and is believed to be the first large-scale database of eye movements to be made available to the vision research community. The database, along with MATLAB functions for its use, may be downloaded freely here and may be used without restriction for educational and research purposes, provided that our paper and this website are cited in any published work.

We have decided to make the data set available to the vision research community free of charge. If you use this database in your research, we kindly ask that you reference this website and the following article:

The still images used in this experiment were selected from the Natural Stimuli Collection created by Hans van Hateren. The images are modified from the original natural image stimuli by cropping them to the central 1024x768 pixels. Permission to release the modified images on this site was graciously granted by van Hateren. In addition to the above citation, we ask that you acknowledge van Hateren's image database in your publications, following the instructions provided in http://hlab.phys.rug.nl/archive.html.
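Cropping to the central 1024x768 pixels is simple arithmetic: the surplus width and height are split evenly between the two sides. The Python sketch below illustrates this under stated assumptions. It is not the authors' preprocessing code. The list-of-rows image representation is hypothetical, and the 1536x1024 source size in the usage line is an assumed example; check it against the files you actually download.

```python
def central_crop_box(src_w, src_h, crop_w=1024, crop_h=768):
    """Return (left, top, right, bottom) of a crop window centred in the source.

    Integer division puts any odd leftover pixel on the right/bottom side.
    """
    if crop_w > src_w or crop_h > src_h:
        raise ValueError("crop window is larger than the source image")
    left = (src_w - crop_w) // 2
    top = (src_h - crop_h) // 2
    return left, top, left + crop_w, top + crop_h


def central_crop(pixels, crop_w=1024, crop_h=768):
    """Crop an image stored as a list of rows (hypothetical representation)."""
    src_h = len(pixels)
    src_w = len(pixels[0]) if src_h else 0
    left, top, right, bottom = central_crop_box(src_w, src_h, crop_w, crop_h)
    return [row[left:right] for row in pixels[top:bottom]]


if __name__ == "__main__":
    # Assumed 1536x1024 source frame, for illustration only.
    print(central_crop_box(1536, 1024))  # (256, 128, 1280, 896)
```

With a NumPy array, the same box would translate to the slice `img[top:bottom, left:right]`.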

The zipped files are password protected. Please email Ian van der Linde (ianvdl@bcs.org) for the password, stating your university or industry affiliation and briefly describing how you plan to use the database.

Using the database

Download the entire database here.

If you download this database, you are assumed to agree to the copyright notice below.

Folders in the database:

- Images: This folder contains the 101 natural image stimuli used in our experiments.

- Fixations: This folder contains the eye movement data, stored as a separate .mat file for each image. Each .mat file holds both the raw eye movements and the computed fixations. Run view_doves_fix.m to see an example of how to use these data.

- RawData: This folder contains, in plain text format, all the information collected in the course of the experiment. Each file corresponds to one observer. Besides the raw eye movement data, the files include the results of the memory task, the eye movement calibration results, and other details of the experiment that may be useful when mining the data for eye movement statistics. Because the files are plain text, they can be read by any user-written software and do not require MATLAB. If you prefer to compute fixations with your own algorithm, you may use the raw eye movement data from either the Fixations folder or this folder.

- Code: This folder contains a selection of MATLAB programs that help you access the data in DOVES.
  - view_doves_fix.m: This program illustrates how to read in an image and the corresponding eye movement data from the Fixations folder. The eye movement data for each subject are then superimposed on the image.
  - view_doves_fixduration.m: A program that illustrates how to extract fixation durations.
  - view_doves_raweye.m: This program illustrates how to read in the raw eye movement data stored in the RawData folder. The same information is also accessible from the data in the Fixations folder.
  - view_doves_rawcalib.m: This program illustrates how to extract only the eye movement calibration data from the RawData folder.
  - view_task_results.m: A program that reads in the results of the yes-no memory task and counts the number of hits, misses, correct rejections, and false alarms.
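The hit/miss/correct-rejection/false-alarm counts reported by view_task_results.m follow the standard signal-detection classification of a yes-no task. The Python sketch below illustrates that classification only and is not a port of the authors' MATLAB program. It assumes each trial can be reduced to a pair of booleans: whether the probe image was among the viewed images, and whether the observer answered "yes". That trial format is hypothetical and is not the layout of the RawData files.

```python
def tally_yes_no(trials):
    """Count signal-detection outcomes for a yes-no recognition task.

    trials: iterable of (was_shown, said_yes) booleans (hypothetical format).
    was_shown means the probe image was among the images viewed;
    said_yes means the observer reported having seen it.
    """
    counts = {"hit": 0, "miss": 0, "false_alarm": 0, "correct_rejection": 0}
    for was_shown, said_yes in trials:
        if was_shown:
            counts["hit" if said_yes else "miss"] += 1
        else:
            counts["false_alarm" if said_yes else "correct_rejection"] += 1
    return counts


def hit_and_false_alarm_rates(counts):
    """Hit rate = hits / old probes; false-alarm rate = false alarms / new probes."""
    old = counts["hit"] + counts["miss"]
    new = counts["false_alarm"] + counts["correct_rejection"]
    hit_rate = counts["hit"] / old if old else float("nan")
    fa_rate = counts["false_alarm"] / new if new else float("nan")
    return hit_rate, fa_rate


if __name__ == "__main__":
    trials = [(True, True), (True, False), (False, True), (False, False), (True, True)]
    counts = tally_yes_no(trials)
    print(counts)  # {'hit': 2, 'miss': 1, 'false_alarm': 1, 'correct_rejection': 1}
    print(hit_and_false_alarm_rates(counts))
```

Rates are returned as NaN rather than raising when a trial class is empty, so a partial data file does not abort a batch analysis.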

Examples from the database

Original Image


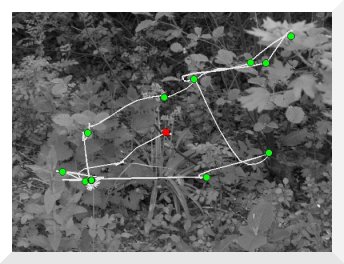

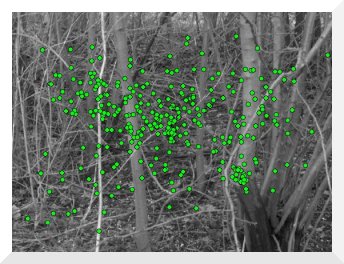

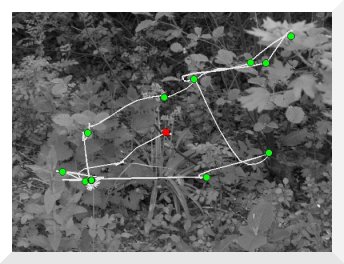

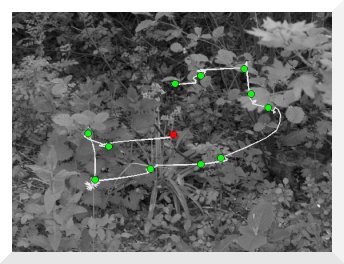

Fixations of the first subject. The red square shows the first fixation, green dots show the computed fixations, and the white line shows the "raw" eye movement trace.

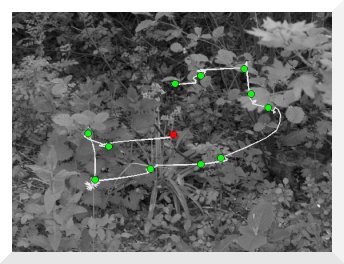

Fixations of the second subject. Note that both subjects fixate on the flower, as expected.

- The user can also analyze the fixation durations of a subject or collect the fixations of all subjects for a single image.


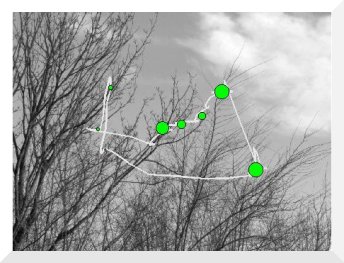

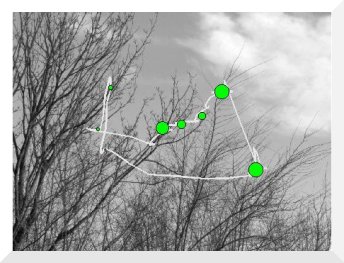

An example of fixation durations, indicated by the size of the circles.

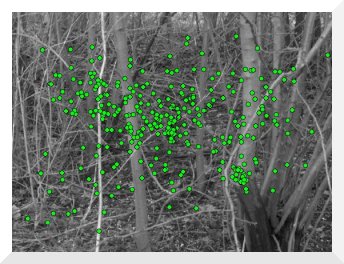

An example of fixations from all subjects on an image.
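One way to draw fixation durations as circle sizes is to scale each radius with the square root of the duration, so that circle area, rather than radius, is proportional to duration. The Python sketch below shows that mapping. It is a plotting design choice, not necessarily what view_doves_fixduration.m does. The (start, end) millisecond intervals are a hypothetical input format, not necessarily how the .mat files store durations, and `max_radius` is an arbitrary parameter.

```python
import math


def durations_from_intervals(intervals):
    """Fixation durations from (start_ms, end_ms) pairs (hypothetical format)."""
    return [end - start for start, end in intervals]


def duration_radii(durations, max_radius=20.0):
    """Circle radii whose areas are proportional to the fixation durations.

    The longest fixation is drawn with max_radius (an arbitrary plotting choice).
    """
    if not durations:
        return []
    longest = max(durations)
    if longest <= 0:
        raise ValueError("durations must be positive")
    return [max_radius * math.sqrt(d / longest) for d in durations]


if __name__ == "__main__":
    durations = durations_from_intervals([(0, 100), (150, 550)])
    print(durations)                  # [100, 400]
    print(duration_radii(durations))  # [10.0, 20.0]
```

Scaling the radius linearly would make a fixation that lasts twice as long look four times as large, which visually overstates long fixations. That is the reason for the square root.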

- Example of the eye tracker "losing track": If you plan to use your own code to generate fixations from the eye movement data, please be aware that the tracker may occasionally have lost track of the observer's eyes. This appears in the eye movement trace as a repeated vertical sinusoidal path. Your fixation algorithm should ignore such segments, because their spatio-temporal dynamics do not resemble those of a human fixation. As the following figure shows, our fixation algorithm computes no fixations in the sinusoidal data but still captures the fixations elsewhere in the trace.
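A common way to write such a fixation detector is the dispersion-threshold (I-DT) method. A window of at least `min_samples` gaze samples counts as a fixation only if its dispersion, (max x - min x) + (max y - min y), stays at or below `max_dispersion`. The window is then grown for as long as that holds. A vertical sinusoidal sweep spreads rapidly in y, so windows over it are rejected, provided the sweep exceeds the threshold within `min_samples` samples. The Python sketch below is a textbook I-DT, not the DOVES fixation algorithm. The (x, y) sample format and both thresholds are hypothetical and would need tuning to your tracker's sampling rate and viewing geometry.

```python
def dispersion(points):
    """I-DT dispersion: horizontal extent plus vertical extent of the points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def idt_fixations(samples, max_dispersion, min_samples):
    """Detect fixations in a list of (x, y) gaze samples with I-DT.

    Returns (centroid_x, centroid_y, start, end) tuples, where samples[start:end]
    is the window classified as one fixation. max_dispersion and min_samples
    are hypothetical thresholds: tune them to your data.
    """
    fixations = []
    start, n = 0, len(samples)
    while start + min_samples <= n:
        end = start + min_samples
        if dispersion(samples[start:end]) <= max_dispersion:
            # Grow the window while the points stay tightly clustered.
            while end < n and dispersion(samples[start:end + 1]) <= max_dispersion:
                end += 1
            window = samples[start:end]
            cx = sum(x for x, _ in window) / len(window)
            cy = sum(y for _, y in window) / len(window)
            fixations.append((cx, cy, start, end))
            start = end  # consume the whole fixation window
        else:
            start += 1  # slide past a sample that belongs to no fixation


    return fixations


if __name__ == "__main__":
    steady = [(100, 100), (101, 100), (100, 101), (101, 101), (100, 100)]
    lost_track = [(200, y) for y in (0, 300, 0, 300, 0, 300)]  # vertical sweep
    later = [(300, 200)] * 5
    print(idt_fixations(steady + lost_track + later, max_dispersion=10, min_samples=4))
    # [(100.4, 100.4, 0, 5), (300.0, 200.0, 11, 16)]
```

Dispersion is recomputed from scratch each time the window grows. This keeps the sketch short, but it makes the sketch quadratic in the worst case; a production version would update running minima and maxima instead.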

An example of the eye tracker losing the ability to track.

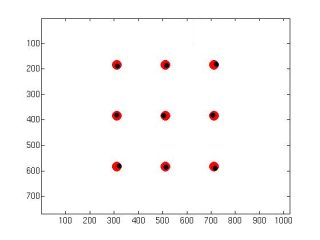

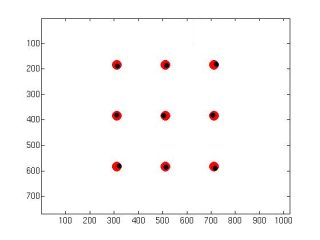

An example of the eye tracker calibration. The red dots show the calibration locations; the black dots are the subject's recorded eye positions while looking at the red dots.
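The calibration figure suggests a simple accuracy check: the distance between each calibration target (red dot) and the eye positions recorded while the observer looked at it (black dots). The Python sketch below averages the Euclidean error for each target. It is an illustration, not the analysis performed by view_doves_rawcalib.m. The input layout (one list of recorded positions per target, in the same units as the targets, assumed to be pixels) is hypothetical.

```python
import math
from statistics import mean


def calibration_errors(targets, recorded):
    """Mean Euclidean error between each calibration target and its recorded gaze.

    targets:  list of (x, y) calibration locations (the red dots)
    recorded: recorded[i] is the list of (x, y) eye positions measured while the
              observer looked at targets[i] (the black dots); hypothetical layout
    """
    if len(targets) != len(recorded):
        raise ValueError("need one list of recorded positions per target")
    errors = []
    for (tx, ty), points in zip(targets, recorded):
        if not points:
            errors.append(float("nan"))  # no samples recorded for this target
            continue
        errors.append(mean([math.hypot(x - tx, y - ty) for x, y in points]))
    return errors


if __name__ == "__main__":
    targets = [(0, 0), (100, 100)]
    recorded = [[(3, 4), (0, 0)], [(100, 110)]]
    print(calibration_errors(targets, recorded))  # [2.5, 10.0]
```

Reporting the error per target, rather than as one overall mean, shows whether accuracy degrades toward particular regions of the screen.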

-----------COPYRIGHT NOTICE STARTS WITH THIS LINE------------

Copyright © 2007 The University of Texas at Austin

All rights reserved.

Permission is hereby granted, without written agreement and without license or royalty fees, to use, copy, modify, and distribute this database (the eye movement data only) and its documentation for any purpose, provided that the copyright notice in its entirety appear in all copies of this database, and the original source of this database, Laboratory for Image and Video Engineering (LIVE, http://live.ece.utexas.edu) and Center for Perceptual Systems (CPS, http://www.cps.utexas.edu) at the University of Texas at Austin (UT Austin, http://www.utexas.edu), is acknowledged in any publication that reports research using this database. The database is to be cited in the bibliography as:

The still images used in this experiment were selected from the Natural Stimuli Collection created by Hans van Hateren. The images used are modified from the original natural image stimuli, by cropping and using only the central 1024x768 pixels. Permission to release the modified images on this site was graciously granted by van Hateren. In addition to the above citation, we ask that you acknowledge van Hateren's image database in your publications, following the instructions provided in http://hlab.phys.rug.nl/archive.html.

IN NO EVENT SHALL THE UNIVERSITY OF TEXAS AT AUSTIN BE LIABLE TO ANY PARTY FOR DIRECT, INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES ARISING OUT OF THE USE OF THIS DATABASE AND ITS DOCUMENTATION, EVEN IF THE UNIVERSITY OF TEXAS AT AUSTIN HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

THE UNIVERSITY OF TEXAS AT AUSTIN SPECIFICALLY DISCLAIMS ANY WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. THE DATABASE PROVIDED HEREUNDER IS ON AN "AS IS" BASIS, AND THE UNIVERSITY OF TEXAS AT AUSTIN HAS NO OBLIGATION TO PROVIDE MAINTENANCE, SUPPORT, UPDATES, ENHANCEMENTS, OR MODIFICATIONS.

-----------COPYRIGHT NOTICE ENDS WITH THIS LINE------------
