Welcome to the LIVE-Meta Visually Impaired UGC Database

LIVE-Meta VI-UGC Database

Introduction

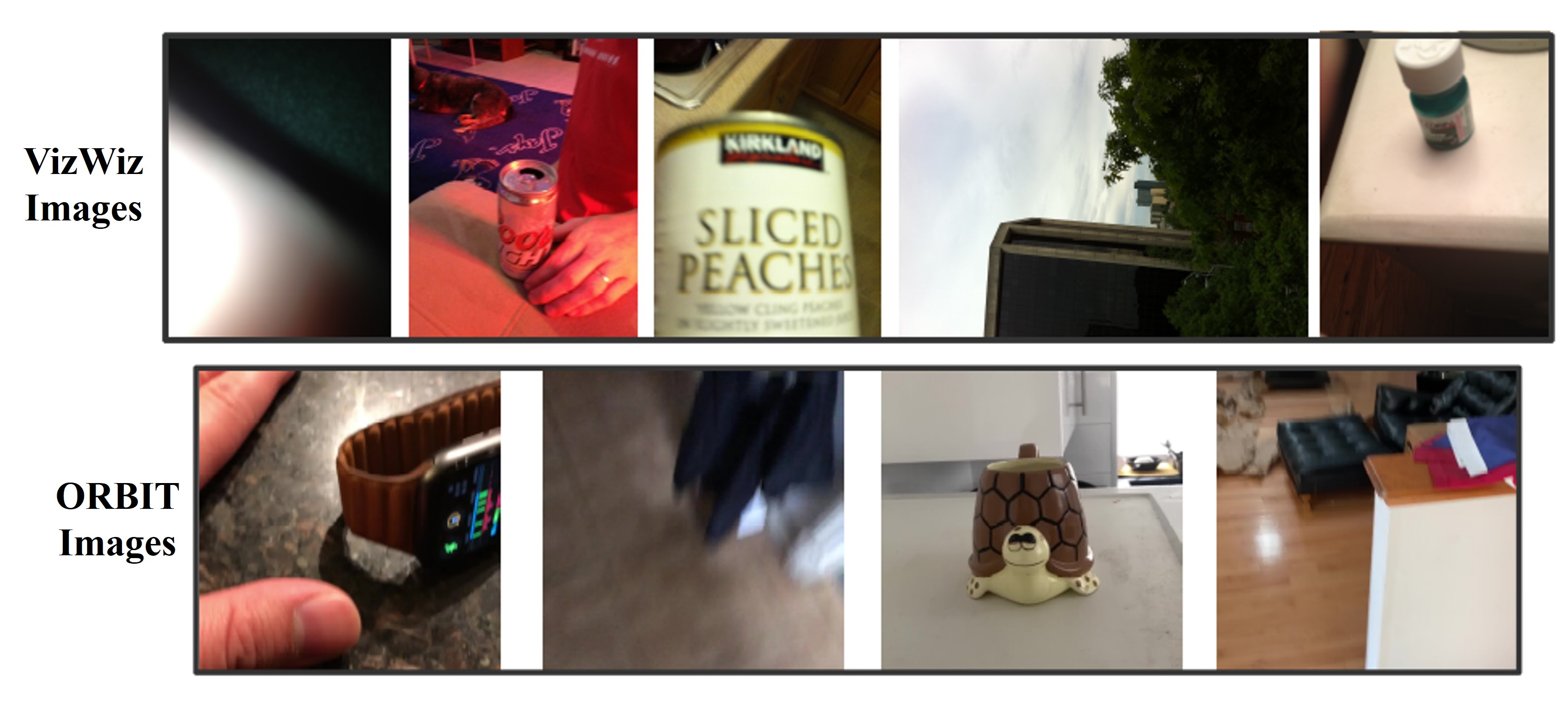

Perception-based image analysis technologies can help visually impaired people take better quality pictures by providing automated guidance, thereby empowering them to interact more confidently on social media. Photographs taken by visually impaired users often suffer from one or both of two kinds of quality issues: technical quality (distortions) and semantic quality (such as framing and aesthetic composition). Here we develop tools to help them minimize occurrences of common technical distortions, such as blur, poor exposure, and noise. Assessing the technical quality of pictures captured by visually impaired users, and providing actionable feedback on it, is a hard problem in its own right, owing to the severe, commingled distortions that often occur.

To advance progress on analyzing and measuring the technical quality of visually impaired user-generated content (VI-UGC), we built a very large and unique subjective image quality and distortion dataset. This new perceptual resource, which we call the LIVE-Meta VI-UGC Database, contains 39,660 real-world distorted VI-UGC images and 39,660 patches, on which we recorded 2.7 million human perceptual quality judgments and 2.7 million distortion labels. The LIVE-Meta VI-UGC dataset is also the largest publicly available distortion classification dataset. Further, as a control, we collected about 75,000 ratings on 2.2K frames from videos captured by visually impaired photographers, which are provided in the ORBIT dataset. We anticipate that the dataset will be a valuable resource for researchers developing better models of perceptual quality for VI-UGC media.

Download

We are making the LIVE-Meta VI-UGC Image Quality Assessment Database available to the research community free of charge. If you use this database in your research, we kindly ask that you cite our paper listed below:

- M. Mandal, D. Ghadiyaram, D. Gurari and A. C. Bovik, "Helping Visually Impaired People Take Better Quality Pictures," in IEEE Transactions on Image Processing, vol. 32, pp. 3873-3884, 2023, doi: 10.1109/TIP.2023.3282067.

You can download the publicly available release of the database by filling out this form. The password and link to the database will be sent to you once you complete the form.

Database Description

The proposed LIVE-Meta VizWiz dataset contains 39,660 images, along with 39,660 patches extracted from them. Half of the patches are salient patches, and the other half are randomly cropped. We also collected 2.7 million ratings and distortion labels on the images, and equal numbers on the patches.

From the ORBIT dataset, we selected 59 types of objects at random, and for each type we selected two videos (one clean and one cluttered). We then sampled each video at one frame per second, collecting a total of 2,235 frame images. Each image in the ORBIT sub-dataset has the same spatial dimensions as the original videos (1080x1080). As with the main dataset, we then collected global quality ratings on these frames to form the auxiliary LIVE-ORBIT frame quality dataset.
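The one-frame-per-second sampling of the ORBIT videos can be illustrated with a minimal sketch. The function name `one_fps_frame_indices` is hypothetical, and picking the frame nearest to each whole second is an assumption. The description above states only the sampling rate, not how frames were chosen or which tools were used.

```python
from typing import List


def one_fps_frame_indices(frame_count: int, fps: float) -> List[int]:
    """Return the indices of the frames nearest to t = 0 s, 1 s, 2 s, ...

    Assumption (not from the dataset description): the frame nearest to
    each whole-second timestamp is the one that gets sampled.
    """
    if frame_count <= 0 or fps <= 0:
        return []
    indices = []
    second = 0
    while True:
        idx = round(second * fps)  # frame nearest to this timestamp
        if idx >= frame_count:     # timestamp falls past the end of the video
            break
        indices.append(idx)
        second += 1
    return indices


# Illustrative values only: a 3-second clip recorded at 30 frames per second.
sampled = one_fps_frame_indices(frame_count=90, fps=30.0)
```

For scale, 59 object types with two videos each gives 118 videos, so the 2,235 sampled frames average roughly 19 frames per video, assuming every selected video contributed frames.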

Investigators

The investigators in this research are:

- Maniratnam Mandal (mmandal@utexas.edu) -- Graduate student, Dept. of ECE, UT Austin

- Deepti Ghadiyaram (deeptigp@fb.com) -- Meta AI Research

- Danna Gurari (danna.gurari@colorado.edu) -- Professor, Dept. of CS, CU Boulder

- Alan C. Bovik (bovik@ece.utexas.edu) -- Professor, Dept. of ECE, UT Austin

Copyright Notice

-----------COPYRIGHT NOTICE STARTS WITH THIS LINE------------

Copyright (c) 2016 The University of Texas at Austin

All rights reserved.

Permission is hereby granted, without written agreement and without license or royalty fees, to use, copy, modify, and distribute this database (the videos, the results and the source files) and its documentation for any purpose, provided that the copyright notice in its entirety appear in all copies of this database, and the original source of this database, Laboratory for Image and Video Engineering (LIVE, http://live.ece.utexas.edu) at the University of Texas at Austin (UT Austin, http://www.utexas.edu), is acknowledged in any publication that reports research using this database.

The following papers are to be cited in the bibliography whenever the database is used as:

- M. Mandal, D. Ghadiyaram, D. Gurari and A. C. Bovik, "Helping Visually Impaired People Take Better Quality Pictures," in IEEE Transactions on Image Processing, vol. 32, pp. 3873-3884, 2023, doi: 10.1109/TIP.2023.3282067.

IN NO EVENT SHALL THE UNIVERSITY OF TEXAS AT AUSTIN BE LIABLE TO ANY PARTY FOR DIRECT, INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES ARISING OUT OF THE USE OF THIS DATABASE AND ITS DOCUMENTATION, EVEN IF THE UNIVERSITY OF TEXAS AT AUSTIN HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

THE UNIVERSITY OF TEXAS AT AUSTIN SPECIFICALLY DISCLAIMS ANY WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. THE DATABASE PROVIDED HEREUNDER IS ON AN "AS IS" BASIS, AND THE UNIVERSITY OF TEXAS AT AUSTIN HAS NO OBLIGATION TO PROVIDE MAINTENANCE, SUPPORT, UPDATES, ENHANCEMENTS, OR MODIFICATIONS.

-----------COPYRIGHT NOTICE ENDS WITH THIS LINE------------
