Automated Quality Curation of Video Collections

T. Goodall, M. Esteva, S. Sweat, and A. C. Bovik

To assess how well the latest I/VQA algorithms handle large collections of videos, we first conducted a small-scale study using a set of video clips. Each video clip was extracted from a different video source. The quality of the source videos ranged from pristine to impaired by any number of unknown distortions.

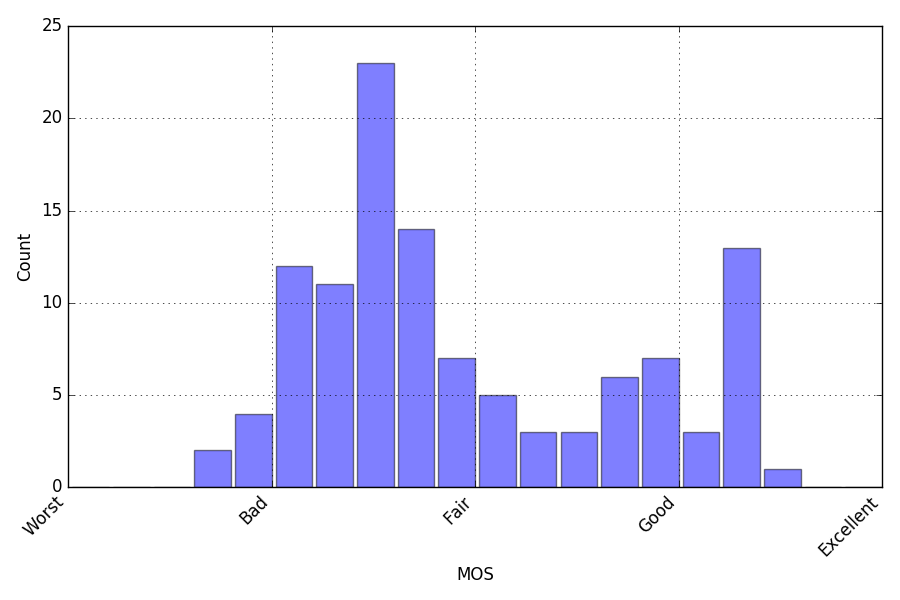

These clips were scored by human subjects to compute Mean Opinion Scores (MOS). As Fig. 1 shows, the MOS distribution contains a higher frequency of both bad- and good-quality videos than of intermediate-quality ones. On inspection, we found this distribution to be representative of our larger video corpus.

Figure 1: Distribution of MOS scores.
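To check the shape of a distribution like the one in Fig. 1 against the released scores, the MOS values can be binned into a simple histogram. The following is a minimal pure-Python sketch; the function name `mos_histogram` is hypothetical, and the default 0 to 100 scale and bin width are assumptions, so adjust `lo`, `hi`, and `bin_width` to match the scale actually used in the released scores file.

```python
def mos_histogram(mos_scores, bin_width=10.0, lo=0.0, hi=100.0):
    """Count MOS values in fixed-width bins covering [lo, hi].

    Hypothetical helper: assumes a continuous MOS scale from lo to hi
    (0 to 100 by default); check this against the released scores.
    """
    n_bins = int(round((hi - lo) / bin_width))
    counts = [0] * n_bins
    for s in mos_scores:
        if not lo <= s <= hi:
            raise ValueError(f"MOS {s} lies outside [{lo}, {hi}]")
        # Place s == hi into the last bin instead of an extra one.
        idx = min(int((s - lo) // bin_width), n_bins - 1)
        counts[idx] += 1
    return counts


if __name__ == "__main__":
    # Toy scores (not real data) with mass at both ends of the scale.
    print(mos_histogram([5, 12, 15, 50, 88, 95, 100], bin_width=25.0))
```

A distribution with many bad- and good-quality clips shows up as larger counts in the outer bins than in the middle ones.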

Unfortunately, we cannot release the video clips due to content license restrictions. Instead, we release the BRISQUE and Video BLIINDS features computed on these clips, along with the MOS gathered in the associated subjective test. This allows video researchers to train their own models and compare them against other datasets.
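When training a model on the released features, its predictions are commonly compared against MOS using the Spearman rank-order correlation coefficient (SROCC). This release does not prescribe an evaluation protocol, so the following is only a minimal pure-Python sketch of SROCC (Pearson correlation of tie-averaged ranks); the function names are hypothetical.

```python
def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson linear correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (vx * vy) ** 0.5


def srocc(predicted, mos):
    """Spearman rank-order correlation between predicted scores and MOS."""
    if len(predicted) != len(mos) or len(mos) < 2:
        raise ValueError("need two equal-length sequences of length >= 2")
    return pearson(average_ranks(predicted), average_ranks(mos))


if __name__ == "__main__":
    # Toy values (not real data): a perfectly monotonic prediction.
    print(srocc([0.1, 0.4, 0.35, 0.9], [12.0, 60.0, 41.0, 93.0]))
```

Because SROCC depends only on rank order, it does not penalize a model whose outputs are on a different scale from the MOS, which suits comparisons across feature sets such as BRISQUE and Video BLIINDS.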

NOTE: before testing with your own collection, all input videos MUST be scaled to the Nexus 9 tablet resolution (2048x1536) while preserving their aspect ratio. Bilinear upscaling was used when extracting the released feature sets.
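The scaling step can be sketched as two parts: computing an output size that keeps the source aspect ratio, and resampling each frame bilinearly. This is a minimal pure-Python sketch under two assumptions not stated above: that "scaled to 2048x1536 while preserving aspect ratio" means fitting the frame inside a 2048x1536 box, and that pixel centres are mapped with the half-pixel convention. The function names are hypothetical, and the exact resampler used for the released features may differ.

```python
def fit_within(width, height, target_w=2048, target_h=1536):
    """Largest (w, h) with the source aspect ratio that fits in the target box.

    Assumption: aspect ratio is preserved by fitting inside 2048x1536
    (no cropping); the leftover area, if any, is not filled here.
    """
    scale = min(target_w / width, target_h / height)
    return max(1, round(width * scale)), max(1, round(height * scale))


def bilinear_resize(frame, out_w, out_h):
    """Bilinearly resample a single-channel frame (list of rows) to out_w x out_h."""
    in_h, in_w = len(frame), len(frame[0])
    out = []
    for y in range(out_h):
        # Map the output pixel centre back into input coordinates, clamped to the frame.
        sy = min(max((y + 0.5) * in_h / out_h - 0.5, 0.0), in_h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, in_h - 1)
        fy = sy - y0
        row = []
        for x in range(out_w):
            sx = min(max((x + 0.5) * in_w / out_w - 0.5, 0.0), in_w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, in_w - 1)
            fx = sx - x0
            # Interpolate horizontally on the two neighbouring rows, then vertically.
            top = frame[y0][x0] * (1 - fx) + frame[y0][x1] * fx
            bottom = frame[y1][x0] * (1 - fx) + frame[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


if __name__ == "__main__":
    print(fit_within(1920, 1080))  # a 16:9 source fills the width of the box
    tiny = [[0, 100], [100, 200]]
    print(bilinear_resize(tiny, 4, 4))
```

A pure-Python loop over roughly three million pixels per frame is slow, so for real collections a compiled implementation such as FFmpeg's `scale` filter, which accepts `flags=bilinear`, is the more practical choice; the sketch only illustrates the arithmetic.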

-----------COPYRIGHT NOTICE STARTS WITH THIS LINE------------

Copyright (c) 2015 The University of Texas at Austin

All rights reserved.

Permission is hereby granted, without written agreement and without license or royalty fees, to use, copy, modify, and distribute this database (the images, the results and the source files) and its documentation for any purpose, provided that the copyright notice in its entirety appear in all copies of this database, and the original source of this database, Laboratory for Image and Video Engineering (LIVE, http://live.ece.utexas.edu) at the University of Texas at Austin (UT Austin, http://www.utexas.edu), is acknowledged in any publication that reports research using this database.

The following paper is to be cited in the bibliography whenever the data is used:

- T. Goodall, M. Esteva, S. Sweat, and A. C. Bovik. "Towards Automated Quality Curation of Video Collections from a Realistic Perspective," Joint Conference on Digital Libraries (JCDL), submitted Feb 2017

IN NO EVENT SHALL THE UNIVERSITY OF TEXAS AT AUSTIN BE LIABLE TO ANY PARTY FOR DIRECT, INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES ARISING OUT OF THE USE OF THIS DATABASE AND ITS DOCUMENTATION, EVEN IF THE UNIVERSITY OF TEXAS AT AUSTIN HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

THE UNIVERSITY OF TEXAS AT AUSTIN SPECIFICALLY DISCLAIMS ANY WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. THE DATABASE PROVIDED HEREUNDER IS ON AN "AS IS" BASIS, AND THE UNIVERSITY OF TEXAS AT AUSTIN HAS NO OBLIGATION TO PROVIDE MAINTENANCE, SUPPORT, UPDATES, ENHANCEMENTS, OR MODIFICATIONS.

-----------COPYRIGHT NOTICE ENDS WITH THIS LINE------------

The data can be obtained using the following links:

- Raw features extracted from the video dataset: BRISQUE, Video BLIINDS

- MOS gathered on the video dataset: scores