Referenceless Prediction of Perceptual Fog Density and Perceptual Image Defogging

The Perceptual Fog Density Assessment and Image Defogging Research at LIVE is being conducted in collaboration with Hongik University.

Introduction

We propose a referenceless perceptual fog density prediction model based on natural scene statistics (NSS) and fog aware statistical features. The proposed model, called Fog Aware Density Evaluator (FADE), predicts the visibility of a foggy scene from a single image without reference to a corresponding fog-free image, without dependence on salient objects in the scene, without geographical or camera side information, without estimating a depth-dependent transmission map, and without training on human-rated judgments. FADE makes use only of measurable deviations from statistical regularities observed in natural foggy and fog-free images.
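Space-domain NSS models in LIVE's referenceless work (for example, BRISQUE) are typically built on mean-subtracted contrast-normalized (MSCN) coefficients. The Python sketch below computes MSCN coefficients under the assumption that FADE's space-domain model takes this form; the 7 × 7 Gaussian window with σ = 7/6 and the stabilizing constant c = 1 (for intensities from 0 to 255) are assumed values, not parameters taken from FADE. Because fog lowers local contrast, it pulls the coefficients toward zero.

```python
import numpy as np

def gaussian_kernel_1d(size: int = 7, sigma: float = 7 / 6) -> np.ndarray:
    """Normalized 1-D Gaussian window (size and sigma are assumed values)."""
    x = np.arange(size) - (size - 1) / 2
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()

def separable_blur(img: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Blur a 2-D array with a symmetric separable kernel, using edge padding."""
    pad = len(k) // 2
    padded = np.pad(img, pad, mode="edge")
    # Filter rows, then columns; 'valid' mode trims the padding back off.
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)

def mscn(gray: np.ndarray, c: float = 1.0) -> np.ndarray:
    """Mean-subtracted contrast-normalized coefficients of a grayscale image."""
    gray = gray.astype(np.float64)
    k = gaussian_kernel_1d()
    mu = separable_blur(gray, k)                      # local mean
    var = separable_blur(gray * gray, k) - mu * mu    # local variance
    sigma = np.sqrt(np.maximum(var, 0.0))             # clip tiny negative round-off
    return (gray - mu) / (sigma + c)

# Demo: a synthetic texture and a fog-like version of it
# (reduced contrast plus a raised intensity floor).
rng = np.random.default_rng(0)
textured = rng.uniform(0, 255, size=(64, 64))
hazy = 0.2 * textured + 160
print(np.mean(mscn(textured) ** 2), np.mean(mscn(hazy) ** 2))
```

For the fog-like image, every nonzero coefficient shrinks in magnitude, so its mean squared MSCN coefficient is lower; this is the kind of measurable deviation from fog-free statistics that fog aware features can exploit.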

Fog aware statistical features that define the perceptual fog density index are derived from a space-domain NSS model and from the observed characteristics of foggy images. FADE not only predicts perceptual fog density for the entire image but also provides a local fog density index for each patch. The fog density predicted by FADE correlates well with human judgments of fog density collected in a subjective study on a large foggy image database.
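One way to turn fog aware features into a single density index is sketched below, assuming our reading of the TIP paper: fit a multivariate Gaussian (MVG) to the test image's patch features, measure a Mahalanobis-like distance D_f to an MVG fitted on a fog-free corpus and D_ff to an MVG fitted on a foggy corpus, and report D = D_f / (D_ff + 1). Treat this formula as an assumption to verify against the paper. The feature extraction itself is left abstract, the feature vectors in the example are synthetic, and the sketch covers only the whole-image index, not the per-patch one.

```python
import numpy as np

def fit_mvg(features: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Fit a multivariate Gaussian (mean, covariance) to rows of feature vectors."""
    return features.mean(axis=0), np.cov(features, rowvar=False)

def mvg_distance(nu1, cov1, nu2, cov2) -> float:
    """Mahalanobis-like distance between two MVG models using a pooled covariance."""
    diff = nu1 - nu2
    pooled = (cov1 + cov2) / 2
    # pinv tolerates a singular pooled covariance.
    return float(np.sqrt(diff @ np.linalg.pinv(pooled) @ diff))

def fog_density(test_feats, fogfree_model, foggy_model) -> float:
    """Assumed FADE-style index D = D_f / (D_ff + 1); larger means denser fog."""
    nu, cov = fit_mvg(test_feats)
    d_f = mvg_distance(nu, cov, *fogfree_model)   # distance from fog-free statistics
    d_ff = mvg_distance(nu, cov, *foggy_model)    # distance from foggy statistics
    return d_f / (d_ff + 1.0)
```

An image whose patch features sit far from the fog-free model and close to the foggy model yields a large D; the "+ 1" keeps the ratio finite when the image matches the foggy model closely.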

As applications, FADE not only accurately assesses the performance of defogging algorithms designed to enhance the visibility of foggy images, but is also well suited for image defogging. A new FADE-based referenceless perceptual image defogging algorithm, dubbed DEnsity of Fog Assessment-based DEfogger (DEFADE), achieves better results than state-of-the-art defogging methods on darker, denser foggy images as well as on standard foggy images.

LIVE Image Defogging Database

Natural fog-free, foggy, and test images used in FADE

Human Subjective Study

Test images

One hundred color images were selected to capture adequate diversity of image content and fog density. They comprise newly recorded foggy images, well-known foggy test images (none of which are contained in the corpus of 500 foggy images), and corresponding defogged images. Some images were captured by a surveillance camera, while others were recorded of the same scene under a variety of fog density conditions. The image sizes varied from 425 × 274 to 1024 × 768 pixels.

Test methodology

1) Subjects: A total of 20 naive students at The University of Texas at Austin participated in the subjective study.

2) Equipment and Display Configuration: We developed the user interface for the study on a Windows PC using MATLAB and the Psychophysics Toolbox. The PC had an Intel® Core™ i7-3612QM CPU @ 2.10 GHz, 8 GB of RAM, and an NVIDIA GeForce GT 640M graphics card. The screen resolution was set to 1920 × 1080 pixels at 60 Hz. The test images were displayed at the center of a 15" LCD monitor (Dell, Round Rock, TX, USA) for 8 seconds at their native resolution to prevent any distortions due to scaling operations performed by software or hardware. No display errors, such as latencies, were encountered. The remaining areas of the display were black. Subjects viewed the monitor from a distance of approximately 2.25 screen heights.
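To relate this viewing setup to visual angle, the arithmetic below assumes that the 15" figure is the panel diagonal and that the panel's aspect ratio matches its 1920 × 1080 resolution (16:9, square pixels). Under those assumptions the screen is about 7.35 in tall, 2.25 screen heights is about 16.5 in (roughly 42 cm), and the full screen height subtends about 25 degrees, or roughly 43 pixels per degree.

```python
import math

def viewing_geometry(diagonal_in: float, res_w: int, res_h: int,
                     distance_in_heights: float) -> tuple[float, float, float]:
    """Return (screen height in inches, viewing distance in inches, pixels per degree)."""
    # Assumes square pixels, so the physical aspect ratio equals res_w : res_h.
    height_in = diagonal_in * res_h / math.hypot(res_w, res_h)
    distance_in = distance_in_heights * height_in
    # Vertical visual angle subtended by the full screen height.
    angle_deg = math.degrees(2 * math.atan(0.5 / distance_in_heights))
    return height_in, distance_in, res_h / angle_deg

height, distance, ppd = viewing_geometry(15.0, 1920, 1080, 2.25)
print(f"height {height:.2f} in, distance {distance:.1f} in, {ppd:.1f} px/deg")
```

Pixels per degree depends only on the vertical resolution and the distance measured in screen heights, so it does not change if the diagonal assumption is wrong; the physical distance does.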

3) Screenshot of the subjective study interface

Download

We are making the LIVE Image Defogging Database available to the research community free of charge. If you use this database in your research, we kindly ask that you reference our papers listed below:

- L. K. Choi, J. You, and A. C. Bovik, "Referenceless Prediction of Perceptual Fog Density and Perceptual Image Defogging," IEEE Transactions on Image Processing, vol. 24, no. 11, pp. 3888-3901, Nov. 2015.

- L. K. Choi, J. You, and A. C. Bovik, "Referenceless perceptual image defogging," IEEE Southwest Symposium on Image Analysis and Interpretation, Apr. 2014.

- L. K. Choi, J. You, and A. C. Bovik, "Referenceless perceptual fog density prediction model," SPIE Human Vision and Electronic Imaging, Feb. 2014.

- L. K. Choi, J. You, and A. C. Bovik, "LIVE Image Defogging Database," Online: http://live.ece.utexas.edu/research/fog/fade_defade.html, 2015.

If you would like to download the database, please fill out the database request form, and the download information will be sent to you. For questions, please contact Lark Kwon Choi (larkkwonchoi@utexas.edu).

LIVE Perceptual Fog Density Prediction and Defogging Algorithms

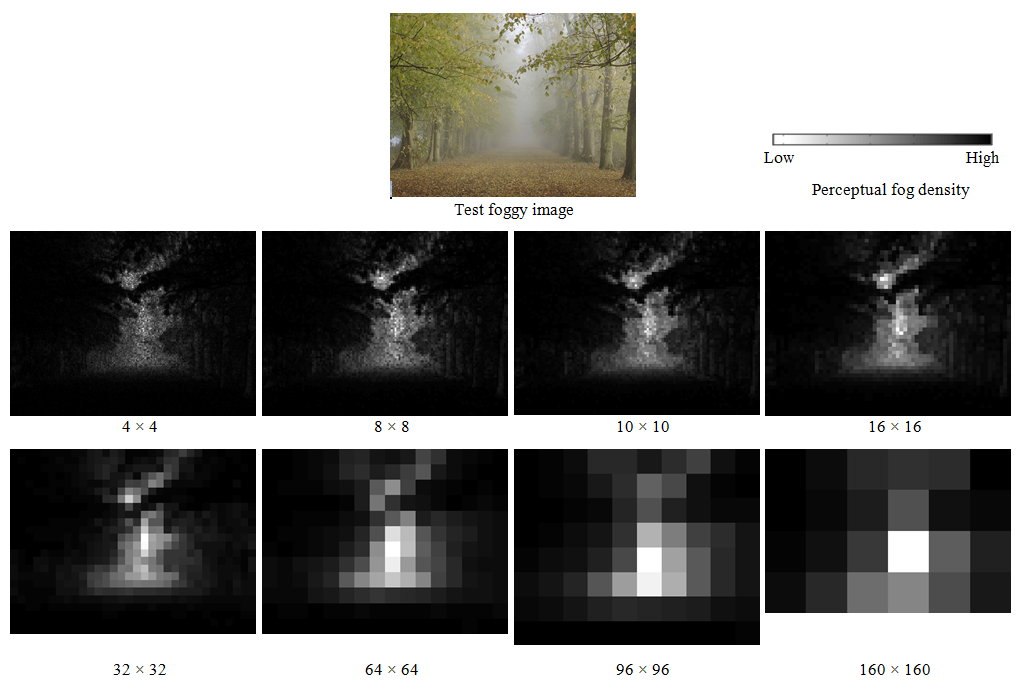

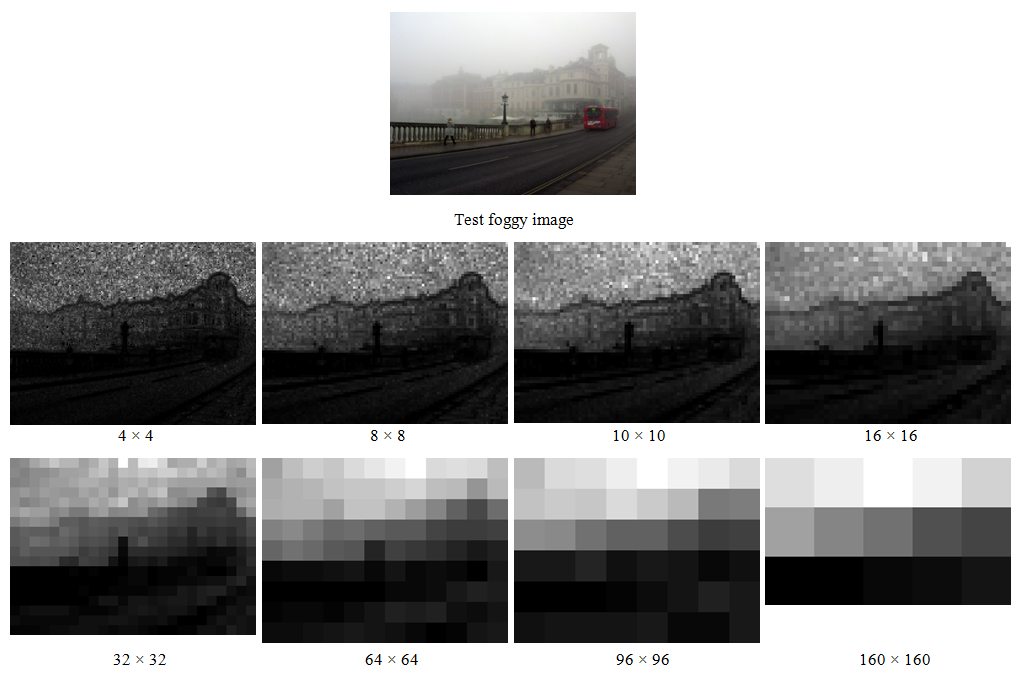

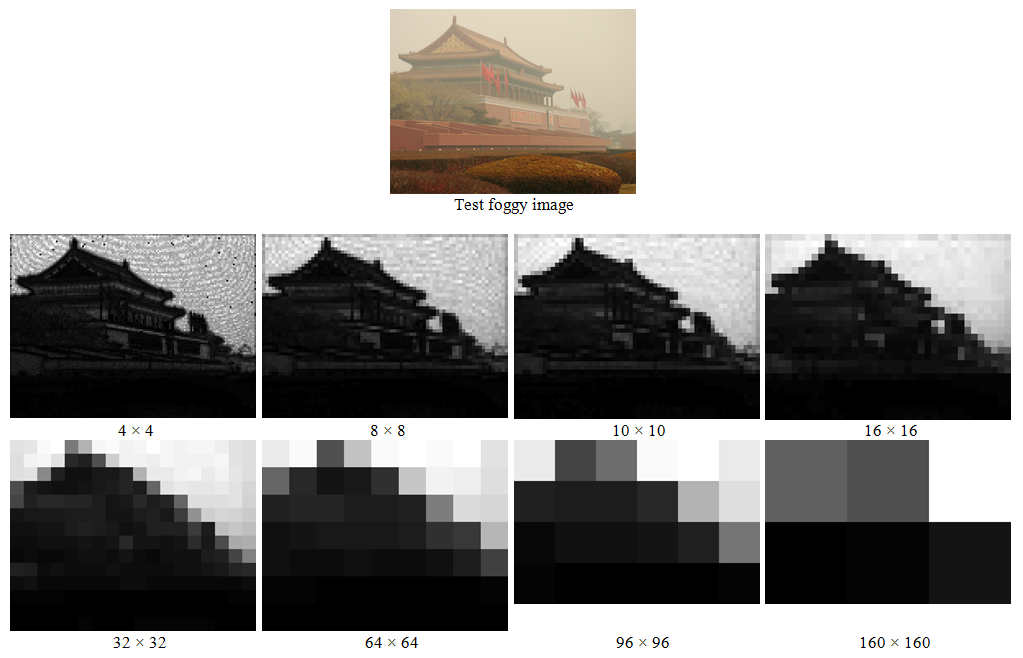

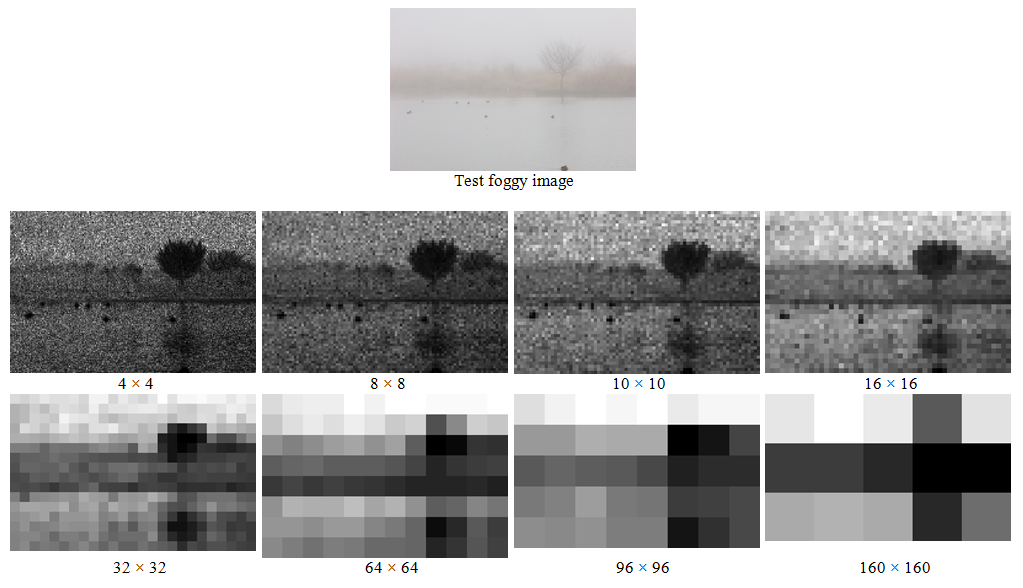

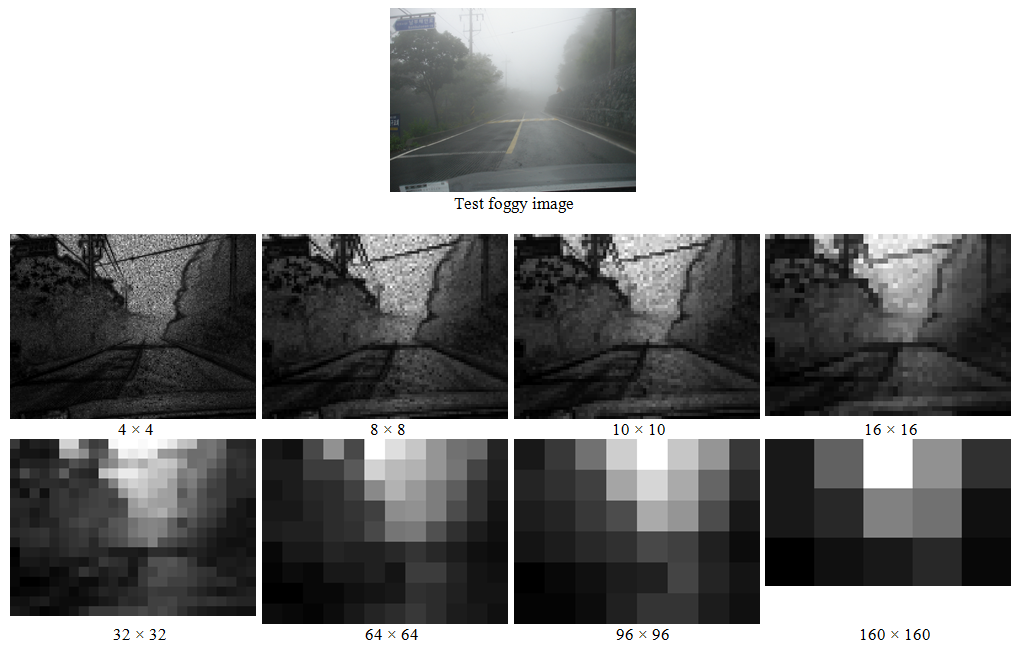

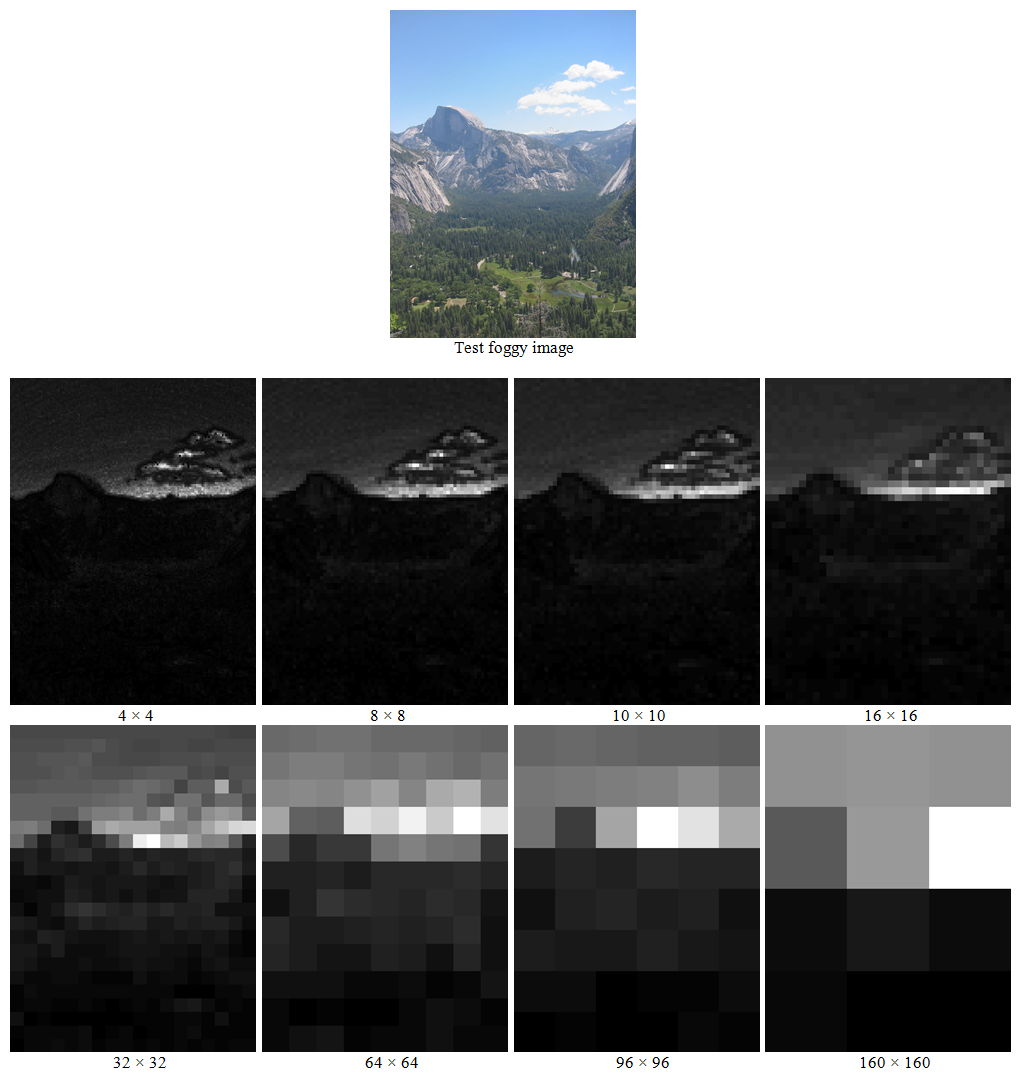

Prediction of perceptual fog density using FADE

Results of the proposed perceptual fog density prediction model FADE for patch sizes ranging from 4 × 4 to 160 × 160 pixels. The predicted perceptual fog density is indicated by gray levels ranging from black (low density) to white (high density).
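A patch-wise density map of this kind can be sketched as follows, assuming non-overlapping P × P patches (partial patches at the right and bottom edges are dropped) and min-max scaling of the per-patch scores to gray levels. The scoring function `low_contrast_proxy` is a hypothetical stand-in for FADE's local fog density, included only so the example runs; it is not FADE.

```python
import numpy as np

def patch_map(gray: np.ndarray, patch: int, score) -> np.ndarray:
    """Score each non-overlapping patch x patch block; partial edge blocks are dropped."""
    rows, cols = gray.shape[0] // patch, gray.shape[1] // patch
    out = np.empty((rows, cols))
    for i in range(rows):
        for j in range(cols):
            block = gray[i * patch:(i + 1) * patch, j * patch:(j + 1) * patch]
            out[i, j] = score(block)
    return out

def to_gray_levels(density: np.ndarray, patch: int) -> np.ndarray:
    """Min-max scale to 0..255 (black = low density, white = high), then upsample by patch."""
    lo, hi = density.min(), density.max()
    scaled = np.zeros_like(density) if hi == lo else (density - lo) / (hi - lo)
    img = np.round(scaled * 255).astype(np.uint8)
    return np.kron(img, np.ones((patch, patch), dtype=np.uint8))

def low_contrast_proxy(block: np.ndarray) -> float:
    """Hypothetical stand-in score, NOT FADE: high when a block is bright and flat."""
    return float(block.mean() / 255.0 - block.std() / 128.0)
```

Calling `to_gray_levels(patch_map(img, p, score), p)` for several values of `p` gives one map per patch size; smaller patches localize density more finely but rest each score on fewer pixels.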

Perceptual image defogging using DEFADE

The proposed defogging and visibility enhancer DEFADE utilizes statistical regularities observed in foggy and fog-free images to extract visible information from three preprocessed images: one white-balanced image and two contrast-enhanced images. Chrominance, saturation, saliency, perceptual fog density, fog aware luminance, and contrast weight maps are applied to the preprocessed images, which are then blended using Laplacian multi-scale refinement.
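The Laplacian multi-scale refinement step can be sketched as standard Laplacian-pyramid blending: normalize the weight maps so that they sum to one at every pixel, build a Gaussian pyramid of each normalized weight map and a Laplacian pyramid of each preprocessed image, sum the weighted Laplacian levels, and collapse the fused pyramid. The sketch assumes each input already has one combined weight map (how DEFADE combines its individual maps is not shown), works on a single channel (apply it per channel for color), and uses a 5-tap binomial kernel and 4 levels as assumed defaults.

```python
import numpy as np

KERNEL = np.array([1, 4, 6, 4, 1], dtype=np.float64) / 16  # assumed smoothing kernel

def blur(img: np.ndarray) -> np.ndarray:
    """Separable 5-tap binomial blur of a 2-D array with edge padding."""
    p = np.pad(img, 2, mode="edge")
    p = np.apply_along_axis(lambda r: np.convolve(r, KERNEL, mode="valid"), 1, p)
    return np.apply_along_axis(lambda c: np.convolve(c, KERNEL, mode="valid"), 0, p)

def down(img: np.ndarray) -> np.ndarray:
    return blur(img)[::2, ::2]

def up(img: np.ndarray, shape) -> np.ndarray:
    """Nearest-neighbour upsample, crop to `shape`, then smooth."""
    big = np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)[: shape[0], : shape[1]]
    return blur(big)

def gaussian_pyramid(img: np.ndarray, levels: int) -> list:
    pyr = [img]
    for _ in range(levels - 1):
        pyr.append(down(pyr[-1]))
    return pyr

def laplacian_pyramid(img: np.ndarray, levels: int) -> list:
    g = gaussian_pyramid(img, levels)
    return [g[k] - up(g[k + 1], g[k].shape) for k in range(levels - 1)] + [g[-1]]

def fuse(images, weights, levels: int = 4, eps: float = 1e-12) -> np.ndarray:
    """Blend 2-D inputs with per-pixel weight maps via Laplacian pyramids."""
    w = np.stack(weights).astype(np.float64)
    w /= w.sum(axis=0) + eps  # normalized weight maps: sum to ~1 at every pixel
    fused = None
    for img, wk in zip(images, w):
        lap = laplacian_pyramid(img.astype(np.float64), levels)
        gw = gaussian_pyramid(wk, levels)
        terms = [l * g for l, g in zip(lap, gw)]
        fused = terms if fused is None else [f + t for f, t in zip(fused, terms)]
    out = fused[-1]
    for lvl in reversed(fused[:-1]):  # collapse from the coarsest level to the finest
        out = up(out, lvl.shape) + lvl
    return out
```

Blending in the pyramid domain, rather than taking a per-pixel weighted sum, smooths the weight maps at coarse scales, which avoids visible seams where the weights change abruptly.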

Overall sequence of processes comprising DEFADE on example images. (a) Input foggy image I. (b) Preprocessed images, from top to bottom: white-balanced image I1, contrast-enhanced image after mean subtraction I2, and fog aware contrast-enhanced image I3. (c) Weight maps: the first, second, and third rows are the weight maps for preprocessed images I1, I2, and I3, respectively; the columns show, from left to right, the chrominance, saturation, saliency, perceptual fog density, luminance, contrast, and normalized weight maps. (d) Laplacian pyramids of the preprocessed images I1, I2, and I3, from top to bottom. (e) Gaussian pyramids of the normalized weight maps corresponding to I1, I2, and I3, from top to bottom. (f) Multi-scale fused pyramid F_l, where l = 9. (g) Output defogged image.
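The first two preprocessing steps in panel (b) can be sketched as below. Both are assumptions for illustration: gray-world white balancing is a swapped-in, commonly used technique that may differ from the one DEFADE uses, and `mean_subtracted_contrast` with its `gain` parameter is a hypothetical form of contrast enhancement after mean subtraction that amplifies deviations from the mean luminance. The fog aware enhancement that produces I3 is not sketched. Inputs are RGB arrays scaled to [0, 1].

```python
import numpy as np

def gray_world_white_balance(rgb: np.ndarray) -> np.ndarray:
    """Gray-world assumption: scale each channel so its mean equals the mean over channels."""
    rgb = rgb.astype(np.float64)
    channel_means = rgb.reshape(-1, 3).mean(axis=0)
    gains = channel_means.mean() / np.maximum(channel_means, 1e-12)
    return np.clip(rgb * gains, 0.0, 1.0)

def mean_subtracted_contrast(rgb: np.ndarray, gain: float = 2.0) -> np.ndarray:
    """Hypothetical I2: amplify deviations from the mean luminance, then clip to [0, 1]."""
    rgb = rgb.astype(np.float64)
    luminance = rgb @ np.array([0.299, 0.587, 0.114])  # Rec. 601 luma weights
    return np.clip(gain * (rgb - luminance.mean()), 0.0, 1.0)

# Demo on a synthetic, color-cast image.
rng = np.random.default_rng(3)
foggy = rng.uniform(0.3, 0.6, size=(16, 16, 3)) * np.array([1.0, 0.8, 0.6])
i1 = gray_world_white_balance(foggy)
i2 = mean_subtracted_contrast(foggy)
print(i1.reshape(-1, 3).mean(axis=0), i2.min(), i2.max())
```

The white-balanced input mainly corrects color casts, while the mean-subtracted input stretches contrast in the brighter, hazier regions; fusing several such inputs lets each contribute where its weight maps favor it.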

Investigators

The investigators in this research are:

- Dr. Lark Kwon Choi ( larkkwonchoi@gmail.com ) -- Graduated from UT Austin in 2015.

- Dr. Jaehee You ( jaeheeu@hongik.ac.kr ) -- Professor, Dept. of ECE, Hongik University

- Dr. Alan C. Bovik ( bovik@ece.utexas.edu ) -- Professor, Dept. of ECE, UT Austin

Copyright Notice

-----------COPYRIGHT NOTICE STARTS WITH THIS LINE------------

Copyright (c) 2015 The University of Texas at Austin

All rights reserved.

Permission is hereby granted, without written agreement and without license or royalty fees, to use, copy, modify, and distribute this database (the images, the results and the source files) and its documentation for any purpose, provided that the copyright notice in its entirety appear in all copies of this database, and the original source of this database, Laboratory for Image and Video Engineering (LIVE, http://live.ece.utexas.edu ) at the University of Texas at Austin (UT Austin, http://www.utexas.edu ), is acknowledged in any publication that reports research using this database. The following papers are to be cited in the bibliography whenever the database is used:

- L. K. Choi, J. You, and A. C. Bovik, "Referenceless Prediction of Perceptual Fog Density and Perceptual Image Defogging," IEEE Transactions on Image Processing, vol. 24, no. 11, pp. 3888-3901, Nov. 2015.

- L. K. Choi, J. You, and A. C. Bovik, "Referenceless perceptual image defogging," IEEE Southwest Symposium on Image Analysis and Interpretation, Apr. 2014.

- L. K. Choi, J. You, and A. C. Bovik, "Referenceless perceptual fog density prediction model," SPIE Human Vision and Electronic Imaging, Feb. 2014.

- L. K. Choi, J. You, and A. C. Bovik, "LIVE Image Defogging Database," Online: http://live.ece.utexas.edu/research/fog/fade_defade.html, 2015.

IN NO EVENT SHALL THE UNIVERSITY OF TEXAS AT AUSTIN BE LIABLE TO ANY PARTY FOR DIRECT, INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES ARISING OUT OF THE USE OF THIS DATABASE AND ITS DOCUMENTATION, EVEN IF THE UNIVERSITY OF TEXAS AT AUSTIN HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

THE UNIVERSITY OF TEXAS AT AUSTIN SPECIFICALLY DISCLAIMS ANY WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. THE DATABASE PROVIDED HEREUNDER IS ON AN "AS IS" BASIS, AND THE UNIVERSITY OF TEXAS AT AUSTIN HAS NO OBLIGATION TO PROVIDE MAINTENANCE, SUPPORT, UPDATES, ENHANCEMENTS, OR MODIFICATIONS.

-----------COPYRIGHT NOTICE ENDS WITH THIS LINE------------
