Welcome to the NVS NAR-IQA Benchmark: Non-Aligned Reference Image Quality Assessment Database for Novel View Synthesis

NVS-NAR-IQA Database

Introduction

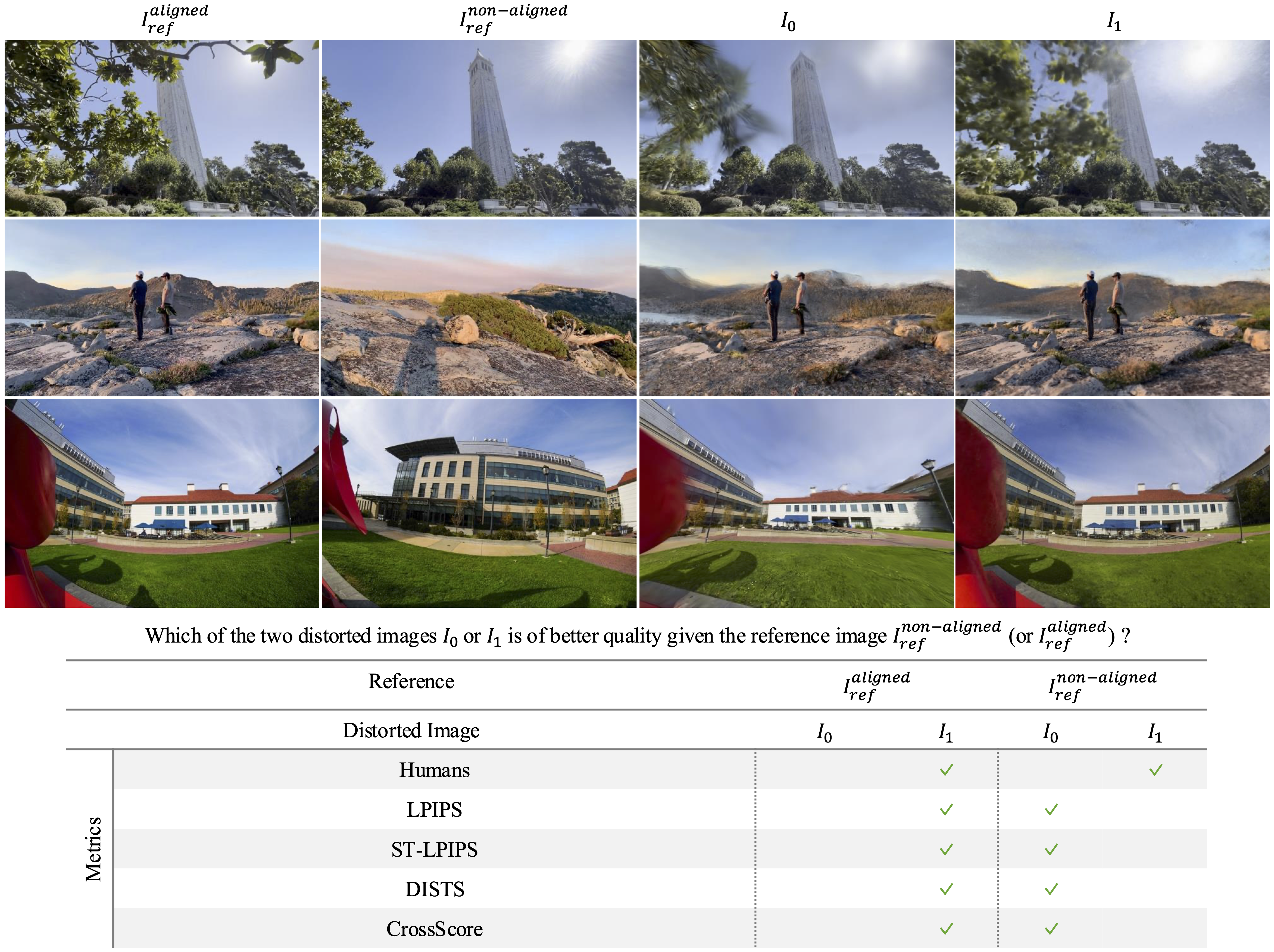

Assessing the perceptual quality of Novel View Synthesis (NVS) outputs poses unique challenges when pixel-aligned ground truth references are unavailable. Traditional Full-Reference (FR) IQA methods fail under spatial misalignment, while No-Reference (NR) approaches struggle to generalize across unseen distortions. To address this gap, we introduce the NVS NAR-IQA Benchmark, the first large-scale benchmark specifically designed for Non-Aligned Reference IQA (NAR-IQA) in the context of NVS. The dataset evaluates distorted novel views against nearby, non-aligned reference images, reflecting realistic deployment scenarios where perfect ground truth is rarely available.

Download

We are making the NVS NAR-IQA Benchmark available to the research community free of charge. If you use this dataset in your research, we kindly ask that you cite our paper listed below.

- Abhijay Ghildyal, Rajesh Sureddi, Nabajeet Barman, Saman Zadtootaghaj, and Alan C. Bovik. Non-Aligned Reference Image Quality Assessment for Novel View Synthesis. Submitted to the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2025.

- Abhijay Ghildyal, Rajesh Sureddi, Nabajeet Barman, Saman Zadtootaghaj, and Alan C. Bovik, "Non-Aligned Reference Image Quality Assessment for Novel View Synthesis," Online: https://live.ece.utexas.edu/research/NVS_NAR_IQA/NVS-NAR-IQA.html, 2025.

Download link here! Please fill out the Google Form to get access to the database.

Database Description

- Scenes and Models:

The NVS NAR-IQA Benchmark was built by training three NeRF models and two Gaussian Splatting models across 17 diverse scenes from the NeRFStudio dataset.

- Train/Test Configurations:

We employed four train–test splits (90–10, 80–20, 70–30, 50–50), resulting in 340 trained models.

- Triplets:

Each triplet contains:

- Two distorted novel views (from different NeRF/GS models)

- One reference view (either aligned or a nearby, non-aligned view from the same video)

Triplet counts: 1,750 high-quality triplets initially, then 1,035 final triplets after removing ambiguous cases.

- User Study:

We conducted a subjective study with five expert annotators experienced in IQA/VQA. Annotators selected which distorted view exhibited higher visual quality relative to the reference. Only triplets with strong inter-rater agreement were retained.

- Final Dataset:

The NVS NAR-IQA Benchmark contains 1,035 curated triplets covering a wide variety of scenes, distortion types, and severity levels, providing a robust foundation for perceptual quality evaluation under non-alignment.
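As a rough illustration of the numbers and the triplet protocol described above, the following Python sketch checks the trained-model count (5 methods × 17 scenes × 4 splits = 340) and shows one way a quality metric could be scored against the annotators' choices. The triplet field names (`ref`, `view_a`, `view_b`, `preferred`) and the "higher score means better quality" convention are assumptions made for this example, not the database's actual file format or the evaluation protocol used in the paper.

```python
# Counts taken from the database description.
NUM_NERF_MODELS = 3
NUM_GS_MODELS = 2
NUM_SCENES = 17
SPLITS = [(90, 10), (80, 20), (70, 30), (50, 50)]  # train-test percentages


def count_trained_models():
    """Number of trained models: methods x scenes x train-test splits."""
    methods = NUM_NERF_MODELS + NUM_GS_MODELS
    return methods * NUM_SCENES * len(SPLITS)


def two_afc_accuracy(triplets, metric):
    """Fraction of triplets where the metric picks the annotators' preferred view.

    Assumptions (hypothetical, for illustration only):
      - each triplet is a dict with keys 'ref', 'view_a', 'view_b', and
        'preferred' (either 'a' or 'b');
      - metric(ref, img) returns a score where higher means better quality.
    Ties are resolved in favour of view 'b'.
    """
    if not triplets:
        raise ValueError("triplets must be non-empty")
    correct = 0
    for t in triplets:
        score_a = metric(t["ref"], t["view_a"])
        score_b = metric(t["ref"], t["view_b"])
        predicted = "a" if score_a > score_b else "b"
        correct += predicted == t["preferred"]
    return correct / len(triplets)
```

For a metric where lower scores mean better quality (a distance, for example), negate the score before passing it in. The `metric` callable can wrap any reference-based method that accepts a non-aligned reference.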

Investigators

The investigators in this research are:

- Abhijay Ghildyal (Sony Interactive Entertainment) – abhijay.ghildyal@sony.com

- Rajesh Sureddi (University of Texas at Austin) – rajesh.sureddi@utexas.edu

- Nabajeet Barman (Sony Interactive Entertainment) – n.barman@ieee.org

- Saman Zadtootaghaj (Sony Interactive Entertainment) – saman.zadtootaghaj@sony.com

- Alan C. Bovik (University of Texas at Austin) – bovik@ece.utexas.edu

Copyright Notice

-----------COPYRIGHT NOTICE STARTS WITH THIS LINE------------

Copyright (c) 2025 The University of Texas at Austin

All rights reserved.

Permission is hereby granted, without written agreement and without license or royalty fees, to use, copy, modify, and distribute this database (the videos, the results and the source files) and its documentation for any purpose, provided that the copyright notice in its entirety appear in all copies of this database, and the original source of this database, Laboratory for Image and Video Engineering (LIVE, http://live.ece.utexas.edu) at the University of Texas at Austin (UT Austin, http://www.utexas.edu), is acknowledged in any publication that reports research using this database.

The following paper/website are to be cited in the bibliography whenever the database is used as:

- Abhijay Ghildyal, Rajesh Sureddi, Nabajeet Barman, Saman Zadtootaghaj, and Alan C. Bovik, Non-Aligned Reference Image Quality Assessment for Novel View Synthesis.

- Abhijay Ghildyal, Rajesh Sureddi, Nabajeet Barman, Saman Zadtootaghaj, and Alan C. Bovik, "Non-Aligned Reference Image Quality Assessment for Novel View Synthesis," Online: https://live.ece.utexas.edu/research/NVS_NAR_IQA/NVS-NAR-IQA.html, 2025.

IN NO EVENT SHALL THE UNIVERSITY OF TEXAS AT AUSTIN BE LIABLE TO ANY PARTY FOR DIRECT, INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES ARISING OUT OF THE USE OF THIS DATABASE AND ITS DOCUMENTATION, EVEN IF THE UNIVERSITY OF TEXAS AT AUSTIN HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

THE UNIVERSITY OF TEXAS AT AUSTIN SPECIFICALLY DISCLAIMS ANY WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. THE DATABASE PROVIDED HEREUNDER IS ON AN "AS IS" BASIS, AND THE UNIVERSITY OF TEXAS AT AUSTIN HAS NO OBLIGATION TO PROVIDE MAINTENANCE, SUPPORT, UPDATES, ENHANCEMENTS, OR MODIFICATIONS.

-----------COPYRIGHT NOTICE ENDS WITH THIS LINE------------
